In my previous blog post, My Personal IRC Renaissance, I explained how and why I’ve recently started using IRC more. One of the things that I think keeps IRC relevant is its simplicity. The simplicity lends itself to hackability and experimentation. It’s great fun! The simplicity can also sometimes be a detriment. In our modern internet world we expect applications to store information and give it to us on demand. IRC doesn’t work like that. It is as real-time as real-time gets. If you’re not logged in, you don’t see your messages. Running a ZNC bouncer is one way to layer some features onto IRC that make it feel like a more modern application. ZNC stays logged into IRC for you, and you can connect to it from multiple IRC clients. The service buffers messages and replays the ones you missed since your last connection.

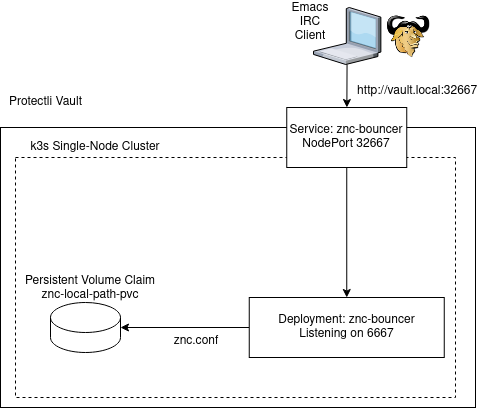

For a while I was running ZNC on a Google Cloud Platform VM. Instructions and resources for how to do so are here, on the System Crafters Wiki. This solution worked for me for about a month, but since I’m not using that server for anything else, it’s quite a price tag to pay just to run a ZNC service. There are lots of cheaper hosting solutions, but I decided instead to run ZNC inside my local home network, where it’s not exposed to the broader internet. This is also inexpensive because I’m leveraging a Protectli Vault Mini-PC that I already own, which is acting as the master node of a small Kubernetes cluster via k3s.

- Implementation

- Connecting from ERC

Implementation

Overview

Update: See my blog IRC Services at Home with k3s

Persistent Volume for ZNC Config

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: znc-local-path-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 200Ki

A Persistent Volume holds data after a deployment or pod is gone. It is used here to supply a permanent location for the /znc-data directory that the ZNC application works with. The ZNC webadmin itself interacts directly with the file /znc-data/configs/znc.conf. This is like the database for ZNC. It holds all information about your servers, nicknames, and configured options. Because this is a dynamic config that changes as you use the ZNC web app, it would be a poor fit for a k8s ConfigMap object: it needs to be updated while the pod runs. The PVC was the best solution I could come up with. Let me know if you come up with anything better!

ZNC Deployment

---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: znc-bouncer
  name: znc-bouncer
spec:
  replicas: 1
  selector:
    matchLabels:
      app: znc-bouncer
  template:
    metadata:
      labels:
        app: znc-bouncer
    spec:
      volumes:
        - name: znc-config
          persistentVolumeClaim:
            claimName: znc-local-path-pvc
      initContainers:
        - name: init-config
          image: znc:1.8.2
          command: ['/bin/sh', '-c', "until [ -f /znc-data/configs/znc.conf ]; do echo waiting for znc.conf; sleep 2; done"]
          volumeMounts:
            - mountPath: /znc-data
              name: znc-config
      containers:
        - name: znc
          image: znc:1.8.2
          ports:
            - containerPort: 6667
          volumeMounts:
            - mountPath: /znc-data
              name: znc-config

Probably not the simplest deployment file you’ve seen. There are a few things to go over here.

- The volumes and volumeMounts are staking a claim on our pv disk space in order to store the /znc-data configs.
- There is an initContainers section that defines a container named init-config; more about that below, because it really ties everything together.
- The znc container is straightforward, listening for connections on port 6667.

What’s up with init-config? Well, ZNC relies on you having a znc.conf in place before the service starts. It also has a command line option, znc --makeconf, that it expects you to run in order to interactively make that configuration file (and other important files). The init-config container runs a loop checking that the znc.conf file has been made and is in the persistent volume. The pod won’t complete its startup until znc --makeconf has been run. If, on the other hand, the file has already been made, the init-config container will be short-lived.
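To make the wait loop easier to picture, here is a minimal sketch of the same polling pattern run against a temporary directory instead of the real /znc-data volume. The function name wait_for_file, the temp paths, and the background job that stands in for running znc --makeconf by hand are all assumptions for illustration; they are not part of the deployment.

```shell
#!/bin/sh
# Sketch of the init-config polling pattern, using a temp directory
# in place of the real /znc-data volume (paths here are illustrative).

# Block until the file at $1 exists, checking every $2 seconds.
wait_for_file() {
    path=$1
    interval=$2
    until [ -f "$path" ]; do
        echo "waiting for $path"
        sleep "$interval"
    done
    echo "found $path"
}

data_dir=$(mktemp -d)
conf="$data_dir/configs/znc.conf"

# Stand-in for a human running `znc --makeconf`: create the file after a delay.
(sleep 2; mkdir -p "$data_dir/configs"; : > "$conf") &

# Capture what the loop printed so it can be inspected afterwards.
wait_log=$(wait_for_file "$conf" 1)
echo "$wait_log"

# Reap the background job and clean up the temp directory.
wait
rm -rf "$data_dir"
```

The loop prints a waiting line on each pass and returns as soon as the file shows up, which is the same reason the init container finishes almost immediately on later restarts when znc.conf is already on the volume.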

The first time you start this deployment you’ll see something like this:

[nackjicholson@vault ~]$ k get po
NAME                           READY   STATUS     RESTARTS   AGE
znc-bouncer-XXXXXXXXXX-YYYYY   0/1     Init:0/1   0          84s

It is waiting for you to make the znc.conf. So how do you do that? Get in the container and do it yourself!

[nackjicholson@vault ~]$ k exec -it znc-bouncer-XXXXXXXXXX-YYYYY -c init-config -- ./entrypoint.sh --makeconf
[WARN tini (64)] Tini is not running as PID 1 and isn't registered as a child subreaper.
Zombie processes will not be re-parented to Tini, so zombie reaping won't work.
To fix the problem, use the -s option or set the environment variable TINI_SUBREAPER to register Tini as a child subreaper, or run Tini as PID 1.
[ .. ] Checking for list of available modules...
[ ** ]
[ ** ] -- Global settings --
[ ** ]
[ ?? ] Listen on port (1025 to 65534): 6667
[ !! ] WARNING: Some web browsers reject ports 6667 and 6697. If you intend to
[ !! ] use ZNC's web interface, you might want to use another port.
[ ?? ] Proceed anyway? (yes/no) [yes]: yes
[ ?? ] Listen using SSL (yes/no) [no]:
[ ?? ] Listen using both IPv4 and IPv6 (yes/no) [yes]:
[ .. ] Verifying the listener...
[ ** ] Unable to locate pem file: [/znc-data/znc.pem], creating it
[ .. ] Writing Pem file [/znc-data/znc.pem]...
[ ** ] Enabled global modules [webadmin]
[ ** ]
[ ** ] -- Admin user settings --
[ ** ]
[ ?? ] Username (alphanumeric): myuser
[ ?? ] Enter password: MY_ZNC_PASSWORD
[ ?? ] Confirm password: MY_ZNC_PASSWORD
[ ?? ] Nick [myuser]: mynick
[ ?? ] Alternate nick [mynick_]:
[ ?? ] Ident [myuser]: mynick
[ ?? ] Real name (optional): First Last
[ ?? ] Bind host (optional):
[ ** ] Enabled user modules [chansaver, controlpanel]
[ ** ]
[ ?? ] Set up a network? (yes/no) [yes]:
[ ** ]
[ ** ] -- Network settings --
[ ** ]
[ ?? ] Name [freenode]: libera
[ ?? ] Server host (host only): irc.libera.chat
[ ?? ] Server uses SSL? (yes/no) [no]: yes
[ ?? ] Server port (1 to 65535) [6697]:
[ ?? ] Server password (probably empty): MY_LIBERA_PASSWORD
[ ?? ] Initial channels: #systemcrafters
[ ** ] Enabled network modules [simple_away]
[ ** ]
[ .. ] Writing config [/znc-data/configs/znc.conf]...
[ ** ]
[ ** ] To connect to this ZNC you need to connect to it as your IRC server
[ ** ] using the port that you supplied. You have to supply your login info
[ ** ] as the IRC server password like this: user/network:pass.
[ ** ]
[ ** ] Try something like this in your IRC client...
[ ** ] /server <znc_server_ip> 6667 myuser:<pass>
[ ** ]
[ ** ] To manage settings, users and networks, point your web browser to
[ ** ] http://<znc_server_ip>:6667/

As soon as that’s done, the init-config container’s original process sees the newly created /znc-data/configs/znc.conf file and exits, which lets the znc container start.

ZNC NodePort Service

---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: znc-bouncer
  name: znc-bouncer
spec:
  ports:
    - name: znc-app
      port: 6667
      protocol: TCP
      targetPort: 6667
      nodePort: 32667
  selector:
    app: znc-bouncer
  type: NodePort

This is pretty straightforward: it runs a NodePort service exposing port 32667 and forwards it to our deployment listening on targetPort: 6667. From my diagram above, this is what lets us connect to the server using the http://vault.local:32667 URI.
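One detail worth spelling out is why the service is exposed on 32667 rather than 6667 itself: by default Kubernetes only hands out node ports between 30000 and 32767. The small sketch below checks a port against that default range. The function name in_nodeport_range is made up for illustration, and the check assumes the cluster has not changed the API server’s --service-node-port-range setting.

```shell
#!/bin/sh
# Check whether a port falls inside Kubernetes' default NodePort range.
# Assumes the cluster has not overridden --service-node-port-range.

NODEPORT_MIN=30000
NODEPORT_MAX=32767

# Succeeds (exit status 0) when $1 is within the default range.
in_nodeport_range() {
    [ "$1" -ge "$NODEPORT_MIN" ] && [ "$1" -le "$NODEPORT_MAX" ]
}

for port in 6667 32667; do
    if in_nodeport_range "$port"; then
        echo "$port: usable as a nodePort"
    else
        echo "$port: outside the default range"
    fi
done
```

With the defaults, 6667 is rejected and 32667 is accepted, so picking a node port that ends in 667 is just a convenient way to remember which service it belongs to.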

I fiddled around with using an Ingress to do this, but IRC clients typically ask for a host and a port, and I could not figure out how to get an Ingress and my local mDNS to let me reach the vault at a hostname like znc.vault.local. If you have found a better solution to this, please let me know: /query nackjicholson on Libera.

Update: I found a way to do this with Ingress, see IRC Services at Home with k3s.

Connecting from ERC

My go-to IRC client is ERC within Emacs, but you can probably apply the same idea to whatever client you use.

One time:

(erc :server "vault.local" :port 32667 :nick "mynick" :password "myuser/libera:MY_ZNC_PASSWORD")

If you’re going to load that in your init.el, you’d likely want something like this, leveraging the auth-source module in Emacs to load your password securely.

init.el

(defun my--start-erc ()
  (interactive)
  ;; ZNC
  (erc :server "vault.local" :port 32667 :nick "mynick"))

.authinfo.gpg

machine vault.local login mynick port 32667 password myuser/libera:MY_ZNC_PASSWORD
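The password field in both ERC examples follows the user/network:pass format that znc --makeconf prints at the end of its run. As a rough sketch, the helpers below assemble that string and a matching authinfo entry from their parts. The function names znc_server_password and authinfo_line are hypothetical, and the values are the same placeholders used throughout this post.

```shell
#!/bin/sh
# Build the server password ZNC expects: user/network:pass.
# $1 ZNC user, $2 network name, $3 ZNC password
znc_server_password() {
    printf '%s/%s:%s\n' "$1" "$2" "$3"
}

# Print an authinfo entry for the ZNC listener.
# $1 host, $2 port, $3 nick, $4 ZNC user, $5 network name, $6 ZNC password
authinfo_line() {
    printf 'machine %s login %s port %s password %s\n' \
        "$1" "$3" "$2" "$(znc_server_password "$4" "$5" "$6")"
}

authinfo_line vault.local 32667 mynick myuser libera MY_ZNC_PASSWORD
```

Keeping the three parts separate makes it clearer that the login is the IRC nick, while the ZNC username and network name travel inside the password.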

Conclusion

I truly hope you’ll find that running your own ZNC on Kubernetes is a great learning experience, and works quite well! Be well, see you on irc.libera.chat!