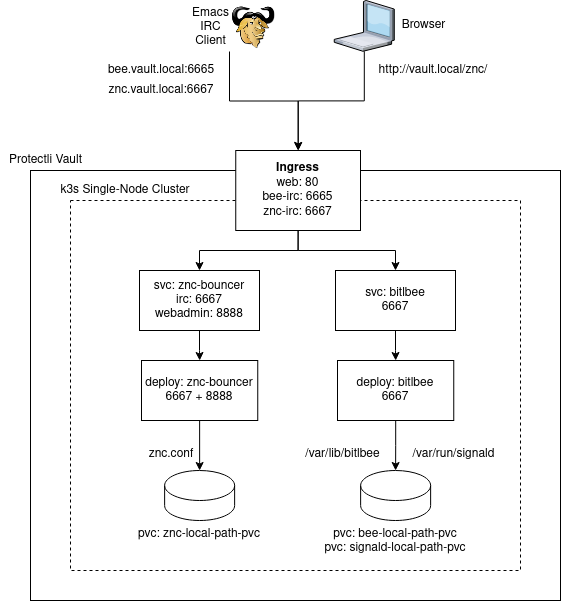

This is a follow-on to my previous two blogs about running ZNC and Bitlbee in k3s/Docker.

In my prior k3s blog I ran a NodePort Kubernetes Service because I couldn’t figure out how to set up an Ingress or how to run ZNC behind a reverse proxy. I’ve figured that out now. It wasn’t entirely simple, because there were a few non-obvious things that needed to be understood:

- IRC needs to be proxied as raw TCP, not HTTP, so ZNC’s IRC port gets its own Traefik entrypoint, separate from the webadmin traffic.

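- Server Name Indication (SNI), which Traefik’s `HostSNI` rule matches on, is part of TLS, so routing TCP connections by subdomain only works if you’re bothering with SSL/TLS. Without TLS, the only `HostSNI` rule you can use is the catch-all ``HostSNI(`*`)``, which is why each IRC service gets its own port.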

- Setting `URIPrefix` on ZNC’s web listener lets the webadmin serve its assets correctly behind a reverse proxy that exposes it under a sub-path such as `/znc/`.

- k3s add-ons like Traefik are configured by dropping their manifests into the server’s manifests folder, `/var/lib/rancher/k3s/server/manifests`.

- Subdomains on a local network with mDNS are possible but not easy, and you should get on with your life. Edit your `/etc/hosts`, my friend: `192.168.X.Y znc.vault.local bee.vault.local`
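For comparison, here is roughly what subdomain routing would look like if you did run IRC over TLS. This is a sketch, not part of my setup: it assumes ZNC has an SSL-enabled listener on a hypothetical port 6697 that the `znc-bouncer` Service would also have to expose, and it reuses Traefik’s existing `websecure` entrypoint with TLS passthrough so that ZNC terminates TLS itself. The route name is made up.

```yaml
# Hypothetical: route TLS-wrapped IRC by subdomain instead of by dedicated port.
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRouteTCP
metadata:
  name: znc-tls-route        # hypothetical name
  namespace: default
spec:
  entryPoints:
    - websecure              # Traefik's TLS entrypoint (exposed on 443 by the k3s chart)
  routes:
    # The SNI hostname is only visible because the client speaks TLS.
    - match: HostSNI(`znc.vault.local`)
      services:
        - name: znc-bouncer
          port: 6697         # assumption: a ZNC SSL listener this setup does not have
  tls:
    passthrough: true        # forward the encrypted stream untouched; ZNC does the handshake
```

With plain-text IRC, none of this applies, and the catch-all ``HostSNI(`*`)`` on a dedicated entrypoint per service is the practical option.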

Here’s what I have now. I’m using a Protectli Vault Mini-PC, but I believe this whole thing could easily run on a Raspberry Pi or whatever else you have lying around the house. :)

The steps described in detail in my previous two blogs will still be helpful in getting the services configured and initialized. Rather than repeat it all, I’m going to share the YAML and let you explore. Feel free to ask me questions if you’re trying to set up something similar for yourself.

`/var/lib/rancher/k3s/server/manifests/traefik.yaml`

```yaml
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: traefik-crd
  namespace: kube-system
spec:
  chart: https://%{KUBERNETES_API}%/static/charts/traefik-crd-9.18.2.tgz
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: traefik
  namespace: kube-system
spec:
  chart: https://%{KUBERNETES_API}%/static/charts/traefik-9.18.2.tgz
  set:
    global.systemDefaultRegistry: ""
  valuesContent: |-
    rbac:
      enabled: true
    ports:
      # Raw TCP entrypoint for Bitlbee's IRC port
      bee-irc:
        port: 6665
        expose: true
        exposedPort: 6665
        protocol: TCP
      # Raw TCP entrypoint for ZNC's IRC port
      znc-irc:
        port: 6667
        expose: true
        exposedPort: 6667
        protocol: TCP
      websecure:
        tls:
          enabled: true
    podAnnotations:
      prometheus.io/port: "8082"
      prometheus.io/scrape: "true"
    providers:
      kubernetesIngress:
        publishedService:
          enabled: true
    priorityClassName: "system-cluster-critical"
    image:
      name: "rancher/library-traefik"
    tolerations:
    - key: "CriticalAddonsOnly"
      operator: "Exists"
    - key: "node-role.kubernetes.io/control-plane"
      operator: "Exists"
      effect: "NoSchedule"
    - key: "node-role.kubernetes.io/master"
      operator: "Exists"
      effect: "NoSchedule"
```
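A caveat worth checking against your k3s version: k3s treats the manifests for its packaged components, including `traefik.yaml`, as its own and re-writes them when it starts, so edits made directly to that file can be lost. k3s documents a `HelmChartConfig` resource for layering your own values over a packaged chart, and it goes in the same manifests folder. A sketch carrying just the two IRC entrypoints from above (the file name `traefik-config.yaml` is my own choice):

```yaml
# /var/lib/rancher/k3s/server/manifests/traefik-config.yaml (file name is arbitrary)
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: traefik            # must match the name of the packaged HelmChart
  namespace: kube-system
spec:
  # These values are layered over the packaged chart's own valuesContent.
  valuesContent: |-
    ports:
      bee-irc:
        port: 6665
        expose: true
        exposedPort: 6665
        protocol: TCP
      znc-irc:
        port: 6667
        expose: true
        exposedPort: 6667
        protocol: TCP
```

This keeps the stock `traefik.yaml` untouched, so only the entrypoints you actually changed live in your own file.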

`traefik-ingress/base/routes.yaml`

```yaml
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRouteTCP
metadata:
  name: znc-routes
  namespace: default
spec:
  entryPoints:
    - znc-irc
  routes:
    - kind: Rule
      match: HostSNI(`*`)
      services:
        - kind: Service
          name: znc-bouncer
          namespace: default
          port: 6667
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRouteTCP
metadata:
  name: bee-routes
  namespace: default
spec:
  entryPoints:
    - bee-irc
  routes:
    - kind: Rule
      match: HostSNI(`*`)
      services:
        - kind: Service
          name: bitlbee
          namespace: default
          port: 6667
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: web-routes
  namespace: default
spec:
  entryPoints:
    - web
  routes:
    - kind: Rule
      match: Host(`vault.local`) && PathPrefix(`/znc/`)
      services:
        - kind: Service
          name: znc-bouncer
          namespace: default
          port: 8888
```
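Because `web-routes` matches ``PathPrefix(`/znc/`)`` with a trailing slash, a request for plain `http://vault.local/znc` matches no router and Traefik answers with a 404. If that bothers you, Traefik’s `redirectRegex` middleware can add the slash. A sketch; the middleware and route names are made up, and the regex assumes Traefik matches it against the full request URL (scheme, host and path), as its `redirectRegex` documentation describes:

```yaml
---
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: znc-add-slash            # hypothetical name
  namespace: default
spec:
  redirectRegex:
    # e.g. http://vault.local/znc -> http://vault.local/znc/
    regex: "^(https?://[^/]+/znc)$"
    replacement: "${1}/"
    permanent: true
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: znc-slash-redirect       # hypothetical name
  namespace: default
spec:
  entryPoints:
    - web
  routes:
    - kind: Rule
      match: Host(`vault.local`) && Path(`/znc`)
      middlewares:
        - name: znc-add-slash
      services:
        # A backend is still declared; the redirect middleware responds before it is used.
        - kind: Service
          name: znc-bouncer
          namespace: default
          port: 8888
```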

`bitlbee/base/service.yaml`

```yaml
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: bitlbee
  name: bitlbee
spec:
  ports:
    - name: bitlbee-irc
      port: 6667
      protocol: TCP
      targetPort: 6667
  selector:
    app: bitlbee
  type: ClusterIP
```

`bitlbee/base/pvc-bitlbee.yaml`

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: bitlbee-local-path-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 1000Ki
```

`bitlbee/base/pvc-signald.yaml`

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: signald-local-path-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 1000Ki
```

`bitlbee/base/deployment.yaml`

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: bitlbee
  name: bitlbee
spec:
  replicas: 1
  selector:
    matchLabels:
      app: bitlbee
  template:
    metadata:
      labels:
        app: bitlbee
    spec:
      volumes:
        - name: bitlbee-data
          persistentVolumeClaim:
            claimName: bitlbee-local-path-pvc
        - name: signald-socket
          persistentVolumeClaim:
            claimName: signald-local-path-pvc
      initContainers:
        # Open up permissions on both volumes before the main containers start
        - name: volume-mount-hack
          image: ezkrg/bitlbee-libpurple:latest
          command: ["sh", "-c", "chmod -R 777 /var/lib/bitlbee && chmod -R 777 /var/run/signald"]
          volumeMounts:
            - name: bitlbee-data
              mountPath: /var/lib/bitlbee
            - name: signald-socket
              mountPath: /var/run/signald
      containers:
        # signald and bitlbee share the signald-socket volume at different paths
        - name: signald
          image: finn/signald:latest
          volumeMounts:
            - mountPath: /signald
              name: signald-socket
        - name: bitlbee
          image: ezkrg/bitlbee-libpurple:latest
          ports:
            - containerPort: 6667
          volumeMounts:
            - mountPath: /var/run/signald
              name: signald-socket
            - mountPath: /var/lib/bitlbee
              name: bitlbee-data
```
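The `base/` folder names lend themselves to kustomize. If you want to apply a whole folder at once, a minimal `kustomization.yaml` for `bitlbee/base` could look like the following; only the four file names come from this setup, the rest is a sketch:

```yaml
# bitlbee/base/kustomization.yaml (assumed file, not part of the listing above)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# kustomize gathers these manifests and applies them together.
resources:
  - service.yaml
  - pvc-bitlbee.yaml
  - pvc-signald.yaml
  - deployment.yaml
```

With that in place, `kubectl apply -k bitlbee/base` creates all four resources, and the same pattern works for `znc/base` and `traefik-ingress/base`.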

`znc/base/service.yaml`

```yaml
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: znc-bouncer
  name: znc-bouncer
spec:
  ports:
    - name: znc-irc
      port: 6667
      protocol: TCP
      targetPort: 6667
    - name: znc-webadmin
      port: 8888
      protocol: TCP
      targetPort: 8888
  selector:
    app: znc-bouncer
  type: ClusterIP
```

`znc/base/pvc.yaml`

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: znc-local-path-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 200Ki
```

`znc/base/deployment.yaml`

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: znc-bouncer
  name: znc-bouncer
spec:
  replicas: 1
  selector:
    matchLabels:
      app: znc-bouncer
  template:
    metadata:
      labels:
        app: znc-bouncer
    spec:
      volumes:
        - name: znc-config
          persistentVolumeClaim:
            claimName: znc-local-path-pvc
      initContainers:
        # Block startup until a znc.conf exists on the volume
        - name: init-config
          image: znc:1.8.2
          command: ['/bin/sh', '-c', "until [ -f /znc-data/configs/znc.conf ]; do echo waiting for znc.conf; sleep 2; done"]
          volumeMounts:
            - mountPath: /znc-data
              name: znc-config
      containers:
        - name: znc
          image: znc:1.8.2
          ports:
            - name: znc-irc
              containerPort: 6667
            - name: znc-webadmin
              containerPort: 8888
          volumeMounts:
            - mountPath: /znc-data
              name: znc-config
```
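The `init-config` init container only waits for `/znc-data/configs/znc.conf` to appear, so the config has to be generated once before ZNC will start; my previous blogs walk through that. If you’d rather do it inside the cluster, one option is a throwaway Pod that mounts the same PVC and runs `znc --makeconf` interactively. This sketch assumes the official `znc` image’s entrypoint forwards arguments starting with `-` to `znc` with `--datadir /znc-data`, as its documented `docker run -it ... znc --makeconf` usage relies on; the Pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: znc-makeconf          # hypothetical one-off helper
  namespace: default
spec:
  restartPolicy: Never        # run once; don't restart after makeconf exits
  volumes:
    - name: znc-config
      persistentVolumeClaim:
        claimName: znc-local-path-pvc
  containers:
    - name: znc
      image: znc:1.8.2
      args: ["--makeconf"]    # assumption: passed through to znc by the image entrypoint
      stdin: true             # makeconf is interactive
      tty: true
      volumeMounts:
        - mountPath: /znc-data
          name: znc-config
```

Attach with `kubectl attach -it znc-makeconf`, answer the prompts, decline the offer to launch ZNC, then `kubectl delete pod znc-makeconf`. makeconf only asks for a single listener port, so the separate IRC and webadmin listeners (with `URIPrefix`) are set up afterwards in webadmin.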

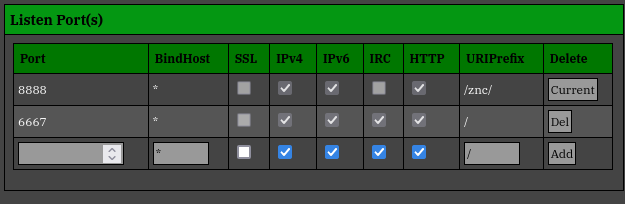

ZNC Webadmin Listeners